Teaching Robots to Act

Somewhere in a future not too far from now, humans may open their homes to robots that can carry groceries or wash dishes on demand. Such technology isn’t yet available, but one engineering clinic project is laying the groundwork to make this sci-fi vision a reality.

"The great thing about language models is we can communicate with them. You don’t need to be an expert to talk to language models. In most robotics, you need to build it for a certain use case and then you’ll need an expert technician who’s been trained to use that robot to be able to have it do anything useful... This is essentially the start of looking in that direction of robotics." — Chaz Allegra, machine learning researcher and MS student at Rowan University

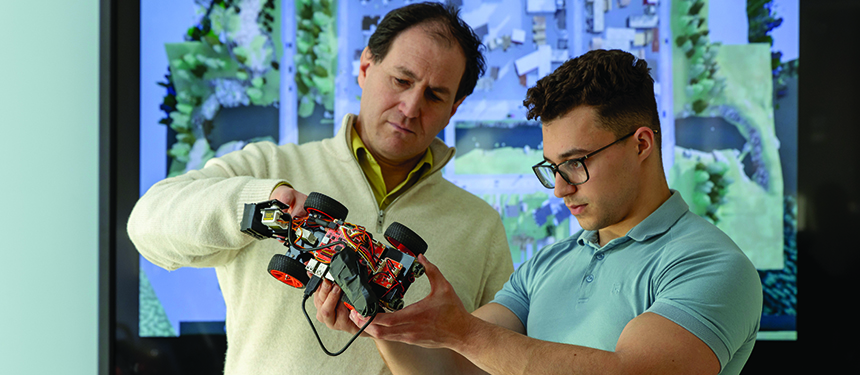

Robi Polikar, Ph.D., professor and head of the Department of Electrical and Computer Engineering, and student Chaz Allegra worked to train language models such as ChatGPT to carry out physical actions on command. In this research, Allegra and Polikar were able to verbally instruct robotic cars to make specific movements, like turning left or driving straight.

To do so, they developed a method called Action Question Answering (AQA). A language model already has a grasp of English, but it does not know what words like “turn left” mean as physical movements. The researchers therefore paired specific movements with corresponding phrases, essentially translating words into physical movements. Before a robot can carry out an action, the model must first be taught what that action means, so Allegra and Polikar trained it on 45,000 action-description pairs.

Once trained, the robotic cars could perform those actions on command: each time a robot hears an instruction like “stop” or “turn right,” it makes the matching movement. The process works in reverse, too. Researchers can present the language model with an action and ask it “What am I doing?”, and it will correctly describe the action.

In one simulated scenario, the robotic car followed step-by-step driving instructions from a navigation system, turning right when the navigation system called for a right turn, and so on.

In principle, this approach could be applied across robotics applications to make these devices as broadly useful as possible. Today, most robots are programmed to perform one specific function. With AQA, robots would not need to be programmed for every task; instead, they would learn over time how to perform a task and how to associate it with a particular phrase. Think of it as an Alexa-enabled device with a body that can perform physical actions.

Going forward, Allegra is shifting from driving-based functions to more general robotic actions, such as household chores and tasks.

Allegra, now pursuing his master’s degree at Rowan, is writing papers on this topic and others for submission to academic journals.